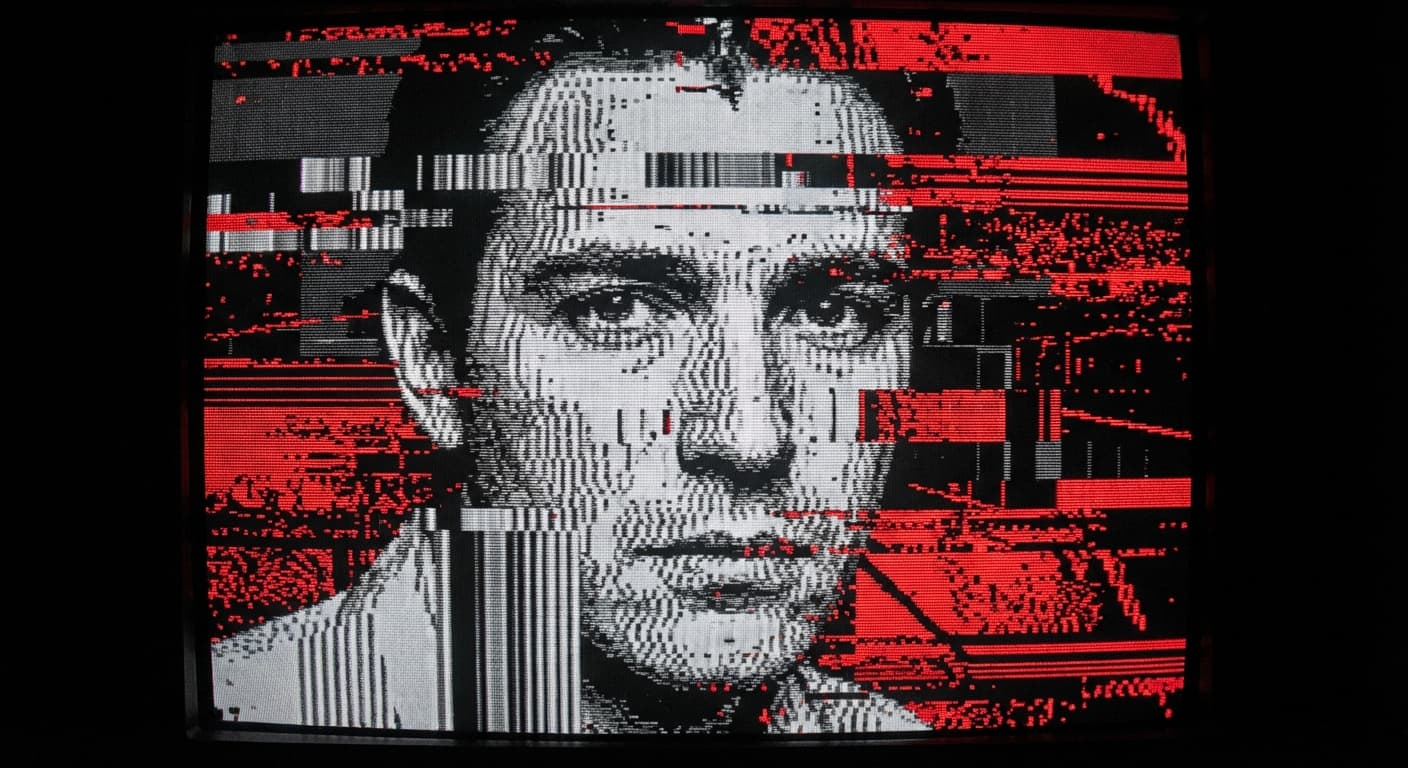

Quick Answer: Deepfakes typically fail at boundaries (blurry edges), texture (plastic skin), eyes (wrong blinking), lighting (mismatched shadows), and temporal consistency (face changes between frames). Most deepfakes show at least one of these tells, though high-quality ones may hide them better.

The Quick Detection Checklist

Before diving into details, here's what to watch for:

- [ ] Blurry or smeared edges around the face

- [ ] Skin that looks too smooth or plastic-like

- [ ] Unnatural blinking (too slow, too fast, or absent)

- [ ] Lighting on the face doesn't match the scene

- [ ] Hair that merges with the background or disappears

- [ ] Teeth that look blurred or malformed

- [ ] Earrings, glasses, or accessories that flicker

- [ ] Head movements that don't match neck movements

- [ ] Audio that's slightly out of sync with lip movements

Not every deepfake shows all these signs. High-quality deepfakes may show none. But most still have at least one tell.

Failure Mode #1: The Boundary Problem

What It Looks Like

The edge where the swapped face meets the original head shows visible seams, blurring, or color differences. Sometimes the face appears to "float" on top of the head rather than being part of it.

Why It Happens

Deepfake algorithms process the face region separately from the rest of the frame. Blending these two regions seamlessly is technically difficult:

- Color matching: The lighting and skin tone of the source face may not match the target

- Masking: The algorithm must decide exactly where the face ends and the rest of the head begins

- Motion blur: Fast head movements create blur that's hard to replicate at boundaries
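The blending arithmetic itself shows why the seam is so hard to hide. The sketch below is a simplified illustration, not any particular tool's code. It blends one row of brightness values from a swapped face into the original frame using an alpha mask. The function names (`feathered_mask`, `blend`, `largest_step`) and the pixel values are made up for the example.

```python
# Simplified illustration: one row of grayscale pixels (0-255) where a swapped
# face meets the original frame. Real tools blend full RGB images with 2D masks.

def feathered_mask(length, edge, feather):
    """Alpha mask: 1.0 inside the face, 0.0 outside, with a linear ramp
    `feather` pixels wide centered on index `edge` (feather=0 gives a hard cut)."""
    mask = []
    for i in range(length):
        if feather == 0:
            mask.append(1.0 if i < edge else 0.0)
        else:
            t = (edge + feather / 2 - i) / feather
            mask.append(min(1.0, max(0.0, t)))  # clamp the ramp to [0, 1]
    return mask


def blend(face, frame, mask):
    """Per-pixel alpha blend: out = a * face + (1 - a) * frame."""
    return [a * f + (1 - a) * g for f, g, a in zip(face, frame, mask)]


def largest_step(row):
    """Largest brightness jump between neighboring pixels."""
    return max(abs(b - a) for a, b in zip(row, row[1:]))


face = [180.0] * 12   # swapped face, lit more brightly than the target
frame = [150.0] * 12  # original skin just outside the face region
hard = blend(face, frame, feathered_mask(12, 6, 0))
soft = blend(face, frame, feathered_mask(12, 6, 6))
print(largest_step(hard))            # 30.0: one sharp, visible seam
print(round(largest_step(soft), 1))  # 5.0: the mismatch spread over a band
```

Feathering does not remove the 30-level color mismatch. It only spreads the mismatch across a band of pixels. That is why viewers tend to see either a hard line or a smeared, blurry edge, and why color matching has to be solved separately.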

What Users Report

"The face looked fine until they turned their head. Then I could see a weird line around the jaw, like the face was a mask sitting on top."

"There's always something wrong at the hairline. The hair either cuts off sharply or blends into the forehead in a strange way."

Detection Tip

Look at the jawline and hairline during head movements. These boundaries are where most blending artifacts appear.

Failure Mode #2: The Texture Problem

What It Looks Like

Skin appears unnaturally smooth, like plastic or wax. Pores, wrinkles, and fine details are missing or smeared. Sometimes there's a subtle "shimmer" as the face moves.

Why It Happens

AI models have difficulty reproducing high-frequency details:

- Training limitations: Models are often trained on compressed video, which has already lost fine texture detail

- Resolution trade-offs: Higher resolution requires more processing power, so many deepfakes sacrifice detail

- Generalization: Models learn "average" skin rather than individual texture patterns

What Users Report

"The person in the video had perfect skin—too perfect. No pores, no small imperfections. Real people don't look that smooth."

"Her face looked like it was made of fondant. Shiny and uniform in a way that human skin never is."

Detection Tip

Compare the skin texture of the face to exposed skin on hands or neck. If the face is significantly smoother, it's suspicious.
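The face-versus-neck comparison can also be made numeric. One common way to score fine texture is the variance of the Laplacian, which measures how much brightness changes from one pixel to the next. Waxy, over-smoothed skin scores low on this measure. The sketch below assumes you have already cropped grayscale patches of the face and of the neck or hand at a similar scale. The helper names are hypothetical. What ratio counts as "significantly smoother" is a judgment call, not an established cut-off.

```python
def laplacian_variance(patch):
    """Variance of the 4-neighbor Laplacian over a 2D list of grayscale values.
    Smooth, plastic-looking regions give low values; pores and wrinkles give higher ones."""
    responses = []
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            lap = (patch[y - 1][x] + patch[y + 1][x] + patch[y][x - 1]
                   + patch[y][x + 1] - 4 * patch[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def texture_ratio(face_patch, reference_patch):
    """Face texture relative to other visible skin; values well below 1 suggest smoothing."""
    ref = laplacian_variance(reference_patch)
    if ref == 0:
        raise ValueError("reference patch has no texture to compare against")
    return laplacian_variance(face_patch) / ref


# Toy 8x8 patches: a fine alternating pattern standing in for pores,
# strong on the neck and almost gone on the face.
neck = [[100 + (8 if (x + y) % 2 else 0) for x in range(8)] for y in range(8)]
face = [[100 + (1 if (x + y) % 2 else 0) for x in range(8)] for y in range(8)]
print(texture_ratio(face, neck))  # 0.015625: the face is far smoother than the neck
```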

Failure Mode #3: The Eye Problem

What It Looks Like

Eyes don't blink naturally—either too little, too much, or with strange timing. Gaze direction may not match where the person should be looking. Eye reflections may be inconsistent or missing.

Why It Happens

Eyes are technically challenging for several reasons:

- Blink patterns: Early deepfakes famously blinked too little, because the photos used to train them seldom showed people mid-blink

- Gaze consistency: The source face may have been looking in a different direction than the person in the target video

- Reflections: The small reflections in eyes (catchlights) should match the scene's lighting

What Users Report

"They didn't blink for 30 seconds. That's when I knew something was wrong."

"The eyes were looking slightly to the left of where they should have been. Like they were staring past whoever they were talking to."

Detection Tip

Count blinks over 30 seconds. At the commonly cited adult resting rate of 15-20 blinks per minute, you would expect roughly 7-10. Significantly fewer blinks, or many more with an unnatural rhythm, is a red flag.
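Automated tools often do this count with the eye aspect ratio (EAR), a widely used landmark-based measure that drops sharply when the eye closes. The sketch below assumes that some face-landmark detector (not shown) supplies six points per eye in each frame. The 0.2 threshold and the 2-frame minimum are illustrative assumptions that would need tuning for real footage.

```python
import math


def eye_aspect_ratio(p):
    """EAR from six (x, y) eye landmarks ordered around the eye: corner,
    upper lid, upper lid, other corner, lower lid, lower lid. Near 0 when closed."""
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])
    horizontal = math.dist(p[0], p[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / minutes


# 30 seconds at 30 fps: eyes open (EAR 0.3) apart from two short blinks.
fps = 30
series = [0.3] * 900
for start in (200, 650):
    for i in range(start, start + 3):
        series[i] = 0.1
print(blinks_per_minute(series, fps))  # 4.0, far below the typical 15-20
```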

Failure Mode #4: The Lighting Problem

What It Looks Like

The lighting on the face doesn't match the rest of the scene. Shadows fall in wrong directions. The face appears "lit from within" or has lighting that doesn't respond to the environment.

Why It Happens

Source and target videos are usually recorded under different lighting:

- Shadow direction: The source face may be lit from the left while the scene is lit from the right

- Color temperature: Indoor and outdoor lighting have different colors

- Reflection patterns: Shiny surfaces on the face should reflect the scene, not the original recording environment

What Users Report

"The room was clearly lit from a window on the right, but the shadows on his face went the wrong direction. Like his face was in a different room."

"Her face looked like it was glowing compared to everything else in the frame."

Detection Tip

Look at where shadows fall on the face versus shadows in the rest of the scene. They should match.
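A crude version of this check can be automated by asking which side of a region is brighter. The sketch below is a rough heuristic, not a real lighting analysis. It compares the left and right halves of a grayscale face patch with a patch of something else in the scene, such as a wall. It also compares the red-to-blue balance as a stand-in for color temperature. Patch extraction is assumed to have happened already, and the 5-level tolerance is arbitrary. Skin tone, paint color, and surface shape can all fool both measures.

```python
def brighter_side(gray_patch, tolerance=5.0):
    """'left', 'right' or 'even': which half of the patch is brighter,
    a rough hint about which side the light is coming from."""
    w = len(gray_patch[0])
    left = [v for row in gray_patch for v in row[: w // 2]]
    right = [v for row in gray_patch for v in row[w - w // 2:]]  # skips the middle column if w is odd
    diff = sum(right) / len(right) - sum(left) / len(left)
    if abs(diff) < tolerance:
        return "even"
    return "right" if diff > 0 else "left"


def warmth(rgb_pixels):
    """Mean red-to-blue ratio; higher values mean warmer (more orange) light."""
    red = sum(p[0] for p in rgb_pixels)
    blue = sum(p[2] for p in rgb_pixels)
    return red / blue


face = [[90, 100, 140, 150]] * 4  # face patch: brighter on the right
wall = [[160, 150, 100, 90]] * 4  # wall in the scene: brighter on the left
print(brighter_side(face), brighter_side(wall))  # right left: a mismatch

face_rgb = [(220, 170, 140)] * 10   # warm, indoor-looking skin tones
scene_rgb = [(180, 190, 215)] * 10  # cool daylight elsewhere in the frame
print(round(warmth(face_rgb), 2), round(warmth(scene_rgb), 2))  # 1.57 0.84
```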

Failure Mode #5: The Temporal Problem

What It Looks Like

The face changes subtly from frame to frame in ways that don't match natural movement. Features may "drift" over time. The face may flicker or shimmer. Sometimes called "temporal coherence breakdown."

Why It Happens

Deepfake models process each frame independently or in small batches:

- Frame independence: Each frame is generated separately, without enforcing consistency with adjacent frames

- Tracking errors: Face tracking may lose the face momentarily, causing jumps

- Identity drift: The model's representation of the face may shift over time
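Frame independence shows up as flicker, where the face region changes more from one frame to the next than the surrounding skin does. Below is a minimal sketch of that measurement. It assumes you already have same-sized grayscale crops of the face and of a reference region such as the neck, flattened into lists, one per frame. The result only means something on fairly steady shots, because real movement also changes pixels. The function names are hypothetical.

```python
def mean_abs_change(frames):
    """Average per-pixel absolute change between consecutive frames.
    `frames` is a list of equally sized, flattened grayscale crops."""
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        total += sum(abs(a - b) for a, b in zip(prev, cur))
        count += len(cur)
    return total / count


def flicker_ratio(face_frames, reference_frames):
    """How much more the face changes frame to frame than the reference region.
    max(..., 1e-6) avoids dividing by zero when the reference is perfectly still."""
    return mean_abs_change(face_frames) / max(mean_abs_change(reference_frames), 1e-6)


face = [[100, 100], [103, 97], [100, 100], [103, 97]]  # jittering face pixels
neck = [[50, 50], [51, 50], [50, 50], [51, 50]]        # nearly steady neck pixels
print(flicker_ratio(face, neck))  # 6.0: the face changes six times as much
```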

What Users Report

"If you watch carefully, their nose gets slightly longer over the course of the video. It's gradual, but by the end they look like a different person."

"There's a weird shimmer on the face, like the details are being recalculated every frame."

Detection Tip

Take screenshots from the beginning and end of a video, ideally with the head in a similar pose. Compare the same facial features: their proportions should stay the same, even if their position in the frame has changed.
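If you mark a few points on each screenshot, by hand or with a face-landmark detector, the comparison becomes numeric. The sketch below divides each distance by the distance between the eyes. That makes the ratios ignore where the face sits in the frame and how large it appears. Turning the head away from the camera still changes the ratios, which is why similar poses matter. The landmark names and coordinates are hypothetical.

```python
import math


def proportions(lm):
    """Scale-free facial ratios from a dict of (x, y) landmarks."""
    eye_dist = math.dist(lm["left_eye"], lm["right_eye"])
    return {
        "nose_length": math.dist(lm["nose_bridge"], lm["nose_tip"]) / eye_dist,
        "mouth_width": math.dist(lm["mouth_left"], lm["mouth_right"]) / eye_dist,
    }


def drift(start_lm, end_lm):
    """Relative change of each ratio between two screenshots (0.1 = 10%)."""
    a, b = proportions(start_lm), proportions(end_lm)
    return {k: abs(b[k] - a[k]) / a[k] for k in a}


start = {"left_eye": (100, 100), "right_eye": (160, 100),
         "nose_bridge": (130, 105), "nose_tip": (130, 145),
         "mouth_left": (110, 170), "mouth_right": (150, 170)}
# Later frame: the person is closer to the camera (1.5x) and further right,
# and the nose has quietly grown.
end = {"left_eye": (200, 150), "right_eye": (290, 150),
       "nose_bridge": (245, 157), "nose_tip": (245, 226),
       "mouth_left": (215, 255), "mouth_right": (275, 255)}
print({k: round(v, 3) for k, v in drift(start, end).items()})
# {'nose_length': 0.15, 'mouth_width': 0.0}
```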

Failure Mode #6: The Alignment Problem

What It Looks Like

The face doesn't properly align with head movements. When the head turns, the face may lag behind or shift unnaturally. Expressions may not match the context or timing of speech.

Why It Happens

Aligning a 2D face image to 3D head movements is mathematically complex:

- Pose estimation errors: The algorithm may misjudge the head's angle

- Expression mismatch: The source face expression may not match what the person should be expressing

- Timing issues: The face swap may be slightly behind or ahead of actual movements

What Users Report

"When they nodded, the face seemed to slide on top of their head, like it was attached with a delay."

"They were supposed to be angry, but the face had this neutral expression that didn't match the body language at all."

Detection Tip

Watch during rapid head movements. Alignment problems are most visible when the head turns quickly.
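You can estimate the lag between head and face with two tracks. The first follows a point on the head that the swap does not touch, such as an ear or the neck. The second follows a point on the face, such as the nose tip. You then find the time shift at which the two motions line up best. This is a standard cross-correlation. The sketch below assumes the two position series come from a tracker (not shown), and its values are made up.

```python
def best_lag(reference, delayed, max_lag):
    """Shift (in frames) of `delayed` that best lines it up with `reference`,
    scored by the mean product of the mean-centered series over their overlap.
    Positive values mean `delayed` trails `reference`."""
    def centered(xs):
        m = sum(xs) / len(xs)
        return [x - m for x in xs]

    r, d = centered(reference), centered(delayed)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(r[i], d[i + lag]) for i in range(len(r)) if 0 <= i + lag < len(d)]
        score = sum(a * b for a, b in pairs) / len(pairs)
        if score > best_score:
            best, best_score = lag, score
    return best


head_x = [0, 0, 0, 10, 20, 30, 20, 10, 0, 0, 0, 0, 0, 0]  # ear position during a head turn
face_x = [0, 0] + head_x[:-2]                               # face follows two frames late
print(best_lag(head_x, face_x, max_lag=4))  # 2
```

At 25 frames per second, a 2-frame lag is 80 ms. Short delays like that are easier to feel as "sliding" than to see directly. The same correlation idea can be applied to audio, for example by correlating loudness with how wide the mouth is open.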

Failure Mode #7: The Audio Sync Problem

What It Looks Like

Lip movements don't perfectly match the audio. Words appear to be spoken slightly before or after the lips move. Sounds that require the lips to close (like "P," "B," or "M") may not show proper lip closure.

Why It Happens

Audio and video are often processed separately:

- Timing offset: The face swap may introduce small delays

- Phoneme errors: The model may not know which lip shapes correspond to which sounds

- Resolution: Lips may be too low-resolution to show precise movements

What Users Report

"It was like watching a dubbed movie. The words didn't quite line up with the mouth."

"They said 'please' but their lips never came together. That's impossible for a real person."

Detection Tip

Watch for words with obvious lip closures (B, M, P). Real speakers must close their lips for these sounds.
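This check can be scripted if you have timestamps for each speech sound, for example from a forced-alignment tool (not shown), plus a per-frame measure of how far apart the lips are. The sketch below is a minimal version under those assumptions. The search window, the "closed" threshold, and the function name are illustrative choices, not established values.

```python
BILABIALS = {"P", "B", "M"}  # sounds that need the lips pressed together


def unclosed_bilabials(phonemes, mouth_open, fps, window=0.08, closed_below=0.05):
    """Return the times of P/B/M sounds where the lips never (nearly) close.

    phonemes:   list of (time_in_seconds, label) pairs
    mouth_open: one value per video frame, lip gap divided by mouth width
                (0.0 means the lips are touching)
    window:     seconds either side of each sound to search for a closure
    """
    flagged = []
    for t, label in phonemes:
        if label not in BILABIALS:
            continue
        start = max(0, int((t - window) * fps))
        end = min(len(mouth_open), int((t + window) * fps) + 1)
        if end > start and min(mouth_open[start:end]) >= closed_below:
            flagged.append(t)
    return flagged


fps = 25
phonemes = [(0.50, "P"), (0.58, "L"), (0.66, "IY"), (0.80, "Z")]  # "please"
never_closes = [0.3] * 50  # 2 s of footage with the lips always apart
closes = never_closes[:12] + [0.0, 0.01] + never_closes[14:]
print(unclosed_bilabials(phonemes, never_closes, fps))  # [0.5]
print(unclosed_bilabials(phonemes, closes, fps))        # []
```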

Failure Mode #8: The Accessory Problem

What It Looks Like

Glasses, earrings, hats, or other accessories behave strangely. They may flicker, disappear momentarily, or not move naturally with the head.

Why It Happens

Accessories are difficult for face-swapping algorithms:

- Occlusion: Glasses partially cover the face, confusing face detection

- Transparency: Glasses are semi-transparent, making them hard to process

- Motion tracking: Small accessories may not be tracked accurately

What Users Report

"Their glasses kept flickering. Sometimes they were there, sometimes they kind of faded out."

"The earring on the left side was there for the whole video, but the one on the right kept disappearing and reappearing."

Detection Tip

Watch the edges of glasses, especially the frames near the temples. Flickering or distortion there is common.
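Accessory flicker can be counted by running an object detector (not shown) over every frame and recording its confidence that a given item is present, for example the right earring. Real accessories rarely blink in and out unless something covers them, so frequent switching is suspicious. The 0.5 threshold and the function names are assumptions for illustration.

```python
def presence_toggles(confidences, threshold=0.5):
    """Number of times an accessory switches between detected and not detected."""
    present = [c >= threshold for c in confidences]
    return sum(1 for before, after in zip(present, present[1:]) if before != after)


def toggles_per_second(confidences, fps, threshold=0.5):
    """Switching rate, so clips of different lengths can be compared."""
    seconds = len(confidences) / fps
    return presence_toggles(confidences, threshold) / seconds


left_earring = [0.9] * 10
right_earring = [0.9, 0.2, 0.9, 0.9, 0.1, 0.8, 0.9, 0.3, 0.9, 0.9]
print(presence_toggles(left_earring), presence_toggles(right_earring))  # 0 6
```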

Failure Mode #9: The Hair Problem

What It Looks Like

Hair doesn't behave naturally. It may appear to merge with the background, have strange edges, or not move correctly with head movements. Flyaway hairs may be missing or frozen in place.

Why It Happens

Hair is one of the hardest things for any image processing algorithm:

- Fine detail: Individual strands are too small for most models to handle

- Motion: Hair moves independently of the head in complex ways

- Background blending: The boundary between hair and background is inherently fuzzy

What Users Report

"The hair looked like it was painted on. No individual strands, no movement. Just a solid blob."

"Where the hair met the background, it kept glitching out. Like the algorithm couldn't decide what was hair and what wasn't."

Detection Tip

Look at flyaway hairs and the hair-background boundary. These areas often reveal processing artifacts.

Why Quality Varies So Much

Not all deepfakes have these problems. Quality depends on several factors:

| Factor | Impact on Quality |
|---|---|
| Source material quality | High-resolution, well-lit source footage produces better results |
| Training data amount | More images of the target face = better face swap |
| Processing time | More time per frame = fewer artifacts |
| Model sophistication | Newer models fix problems older ones had |
| Operator skill | Experienced users know how to minimize problems |

A well-funded production can create deepfakes with few visible artifacts. Quick, amateur deepfakes often show multiple failure modes.

The Detection Arms Race

As detection methods improve, so does generation technology. The failure modes described here were more common in 2020-2023 deepfakes. Newer generations may have fixed some issues while introducing others.

What doesn't change is the principle: deepfakes are reconstructions, and reconstructions have limits. Current technology may not show the same artifacts described here, but it will have artifacts of some kind.

When Detection Fails

Sometimes you can't tell. A high-quality deepfake viewed on a small screen, at low resolution, or after social media compression may show no visible artifacts. In these cases:

- Verify the source: Is this from a trusted outlet?

- Check for corroboration: Is this the only source for this content?

- Consider context: Does this content seem designed to provoke strong emotions?

- Use detection tools: AI-based detectors can catch things humans miss

No single method is foolproof. Detection requires multiple approaches.

Summary

Deepfakes fail in predictable ways. The technology struggles with boundaries, texture, eyes, lighting, temporal consistency, alignment, audio sync, accessories, and hair. Knowing these failure modes helps you spot fakes—but remember that technology improves constantly, and the absence of obvious artifacts doesn't guarantee authenticity.

The best approach combines visual inspection with source verification and healthy skepticism. When something feels wrong, trust that instinct and investigate further.

Related Topics

- Temporal Coherence Breakdown – Why AI video feels unstable over time

- Why AI Characters Are Hard to Keep Consistent – Identity drift explained

- Why AI Video Feels Almost Right but Not Quite – The uncanny valley in AI generation

- Top Trade-offs Between Quality and Stability in AI Generation – Understanding AI generation limitations

- AI Video Failure Modes Index – Complete catalog of AI video problems